Given 7 Registered Voters, What Is The Probability That 2 Of Them Will Vote In The Election?

The survey literature has long shown that more respondents say they intend to vote than actually cast a ballot (e.g., Bernstein et al. 2001; Silver et al. 1986). In addition, some people say they do not expect to vote but actually do, perhaps because they are contacted by a campaign or a friend close to Election Day and are persuaded to turn out. These situations potentially introduce error into election forecasts because these stealth voters and nonvoters often differ in their partisan preferences. In general, Republicans are more likely than Democrats to turn out, though they may be about equally likely to say they intend to vote. As a consequence, pollsters do not rely solely upon a respondent's stated intention when classifying a person as likely to vote or not. Instead, most ask several questions that collectively can be used to estimate an individual's likelihood of voting. The questions measure intention to vote, past voting behavior, knowledge about the voting process and interest in the campaign.

This study examines different ways of using seven standard questions, and sometimes other information, to produce a model of the likely electorate. The questions were originally developed in the 1950s and '60s by election polling pioneer Paul Perry of Gallup and have been used – in various combinations and with some alterations – by Pew Research Center, Gallup and other organizations in their pre-election polling (Perry 1960, 1979). The questions tested here include the following (the categories that give a respondent a point in the Perry-Gallup index, discussed in the following section, are in bold):

- How much thought have you given to the coming November election? Quite a lot, some, only a little, none

- Have you ever voted in your precinct or election district? Yes, no

- Would you say you follow what's going on in government and public affairs most of the time, some of the time, only now and then, hardly at all?

- How often would you say you vote? Always, nearly always, part of the time, seldom

- How likely are you to vote in the general election this November? Definitely will vote, probably will vote, probably will not vote, definitely will not vote

- In the 2012 presidential election between Barack Obama and Mitt Romney, did things come up that kept you from voting, or did you happen to vote? Yes, voted; no

- Please rate your chance of voting in November on a scale of 10 to 1. 0-8, 9, 10

Some pollsters have employed other kinds of variables in their likely voter models, including demographic characteristics, partisanship and ideology. Below we evaluate models that use these types of measures as well.

Two additional kinds of measures tested here are taken from a national voter file. These include indicators for past votes (in 2012 and 2010) and a predicted turnout score that synthesizes past voting behavior and other factors to produce an estimated likelihood of voting. These measures are strongly associated with voter turnout. A detailed analysis of all of these individual measures and how closely each one is correlated with voter turnout and vote choice can be found in Appendix A to this report.

Two broad approaches are used to produce a prediction of voting with pre-election information such as the Perry-Gallup questions or self-reported past voting history (Burden 1997). Deterministic methods use the data to categorize each survey respondent as a likely voter or nonvoter, typically dividing voters and nonvoters using a threshold or "cutoff" that matches the predicted rate of voter turnout in the election. Probabilistic methods use the same information to compute the probability that each respondent will vote. Probabilities can be used to weight respondents by their likelihood of voting, or they can be used as a basis for ranking respondents for a cutoff approach. This analysis examines the effectiveness of both approaches.

The Perry-Gallup likely voter index

What if the survey includes too many politically engaged people?

One complication with the application of a turnout estimate to the survey sample is the fact that election polls tend to overrepresent politically engaged individuals. It may be necessary to apply a higher turnout threshold in making a cutoff to account for the fact that a higher percentage of survey respondents than of members of the general public may actually turn out to vote. Unfortunately, there is no agreed-upon method of making this adjustment, since the extent to which the survey overrepresents the politically engaged, or even changes the respondents' behavior (e.g., by increasing their interest in the election), may vary from study to study and is difficult to estimate.

The data used here include only those who are registered to vote; consequently, the appropriate turnout estimate within this sample should be considerably higher than among the general public. For many of the simulations presented in this report, we estimated that 60% of registered voters would turn out. Assuming that 70% of adults are registered to vote, this would equate to a prediction of 42% turnout of the general public (0.60 × 0.70 = 0.42).

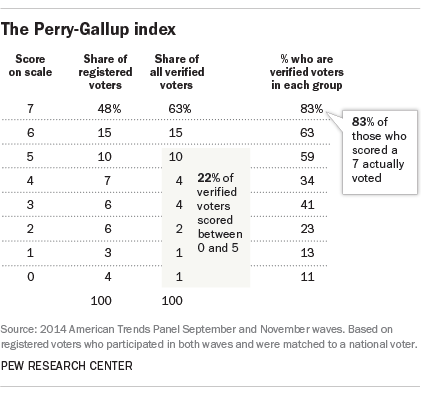

In these data, an expectation of 60% turnout meant that all respondents who scored a 7 on the scale (48% of the total) would be classified as likely voters, along with a weighted share of those who scored 6 (who were 15% of the total).
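The arithmetic behind such a cutoff can be sketched in a few lines. This is only an illustration, not the report's code: the function name `cutoff_weights` is hypothetical, and only the 48% and 15% shares come from the text. The remaining 37% is lumped under a single lower score purely so the shares sum to one.

```python
def cutoff_weights(score_shares, turnout):
    """Return a likely-voter weight (0 to 1) for each index score.

    score_shares maps index score -> share of the sample with that score.
    Scores are admitted from the highest down until the expected turnout
    is filled; the score that straddles the threshold gets a fractional weight.
    """
    weights = {}
    remaining = turnout
    for score in sorted(score_shares, reverse=True):
        share = score_shares[score]
        if share <= 0 or remaining <= 0:
            weights[score] = 0.0          # nothing left to fill
        elif share <= remaining:
            weights[score] = 1.0          # whole group counts as likely voters
            remaining -= share
        else:
            weights[score] = remaining / share  # partial (weighted) share
            remaining = 0.0
    return weights


# Shares for scores 7 and 6 are from the text; the 0.37 under score 5 is
# an illustrative placeholder for everyone scoring below 6.
shares = {7: 0.48, 6: 0.15, 5: 0.37}
weights = cutoff_weights(shares, turnout=0.60)
print(weights)
```

With these inputs, everyone scoring 7 is counted in full and those scoring 6 receive a weight of about 0.8, because the remaining 12 points of expected turnout are spread across a group that makes up 15% of the sample.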

Following the original method developed by Paul Perry, Pew Research Center combines the individual survey items to create a scale that is then used to classify respondents as likely voters or nonvoters. For each of the seven questions, a respondent is given 1 point for selecting certain response categories. For example, a response of "yes" to the question "Have you ever voted in your precinct or election district?" gets 1 point on the scale. Younger respondents are given additional points to account for their inability to vote in the past (respondents who are ages 20-21 get 1 additional point and respondents who are ages 18-19 get 2 additional points). Additionally, those who say they "definitely will not" be voting, or who are not registered to vote, are automatically coded as a zero on the scale. As tested here, the procedure results in an index with values ranging from 0 to 7, with the highest values representing those with the greatest likelihood of voting.
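A minimal sketch of this scoring rule follows. It assumes each of the seven answers has already been coded as 1 (a point-earning category) or 0; the function name and argument names are hypothetical. Capping the result at 7 is also an assumption, made only so the index stays in the stated 0 to 7 range after the age bonus.

```python
def perry_gallup_score(item_points, age, registered=True,
                       definitely_will_not_vote=False):
    """Score one respondent on a 0-7 likely voter index.

    item_points: seven 0/1 values, one per question, where 1 means the
    respondent chose a point-earning response category.
    """
    if len(item_points) != 7:
        raise ValueError("expected one 0/1 value for each of the seven questions")
    # Unregistered respondents and firm nonvoters are coded as zero.
    if not registered or definitely_will_not_vote:
        return 0
    score = sum(item_points)
    # Age adjustment for respondents too young to have voted in the past.
    if 18 <= age <= 19:
        score += 2
    elif 20 <= age <= 21:
        score += 1
    return min(score, 7)  # assumption: cap so the index stays within 0-7


young = perry_gallup_score([1, 1, 1, 1, 1, 0, 0], age=19)
older = perry_gallup_score([1, 0, 1, 1, 1, 1, 1], age=45)
refuser = perry_gallup_score([1] * 7, age=45, definitely_will_not_vote=True)
print(young, older, refuser)
```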

The next step is to make an estimate of the percentage of eligible adults likely to vote in the election. This is typically based on a review of past turnout levels in similar elections, adjusted for judgments about the apparent level of voter interest in the current campaign, the competitiveness of the races and the degree of voter mobilization underway. The estimate is used to produce a "cutoff" on the likely voter scale, selecting the highest-scoring respondents based on the expected turnout in the coming election. For example, if we expected that 40% of the voting-eligible population would vote (a typical turnout for a midterm election), then we would base our survey estimates on the 40% of the eligible public receiving the highest index scores. In reality, 36% of the eligible adult population turned out in 2014. The choice of a turnout threshold is a very important decision because the views of voters and nonvoters are often very different, as was the case in 2014. (See Appendix C for data on how the choice of a turnout target matters.)

Deterministic (or cutoff) methods like this one leave out many actual voters. While those coded 6 and 7 on the scale are very likely to vote (63% and 83% of each group, respectively, were validated to have voted), there also are many actual voters among those who scored below 6: About a fifth (22%) of all verified voters scored between 0 and 5. Of course, the goal of the model is not to classify every respondent but to produce an accurate aggregation of the vote. But if the distribution of those correctly classified does not match that of the actual electorate, the election forecast will be wrong.

Consistent with general patterns observed in previous elections of this type, respondents who scored a 7 on the scale favor Republican over Democratic candidates (by a margin of 50% to 44%). Majorities of those in categories 5 and 6 prefer Democratic candidates. As in most elections, the partisan distribution of the predicted vote depends heavily on where the line is drawn on the likely voter scale. Including more voters usually makes the overall sample more Democratic, especially in off-year elections. That is why judgments about where to apply the cutoff are critical to the accuracy of the method.

Probabilistic models

The same individual survey questions can also be used to create a statistical model that assigns a predicted probability of voting to each respondent, along with coefficients that measure how well each item correlates with turnout. These coefficients can then be used in other elections with surveys that ask the same questions to create a predicted probability of voting for each respondent, based on the assumption that expressions of interest, past behavior and intent all have the same impact regardless of the election. All response options for each item can be used in the model, or they can be coded as they are in the Perry-Gallup method. Regardless of the form of the inputs, the result is a distribution, with each respondent assigned a score on a scale corresponding to how likely he or she is to turn out to vote. If someone is classified as a 0.30, then that respondent is thought to have a 30% chance of voting.

One potential benefit of this method is that it can use more of the information contained in the survey (all of the response categories in each question, rather than just a selected one or two). This also gives respondents who may have a lower likelihood of voting – whether because of their age, lack of ongoing interest in the election or simply having missed a past election – a possibility of affecting the outcome, since we know that many who score lower on the scale actually do vote. These respondents will be counted as long as they have a chance of voting that is greater than zero; they are simply given a lower weight in the analysis than others with a higher likelihood of voting.
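As a rough illustration of weighting by turnout probability, the sketch below sums each respondent's predicted probability under their stated preference and normalizes. The function name and the four-person sample are invented for illustration, and ordinary survey weights are left out for brevity.

```python
def weighted_vote_shares(respondents):
    """Estimate vote shares, weighting each respondent by turnout probability.

    respondents: list of (candidate_preference, turnout_probability) pairs.
    """
    totals = {}
    for preference, probability in respondents:
        totals[preference] = totals.get(preference, 0.0) + probability
    total_weight = sum(totals.values())
    if total_weight == 0:
        raise ValueError("all turnout probabilities are zero")
    return {name: weight / total_weight for name, weight in totals.items()}


# Hypothetical respondents: low-probability voters still count, just less.
sample = [
    ("Republican", 0.9),
    ("Democratic", 0.6),
    ("Democratic", 0.3),
    ("Republican", 0.2),
]
vote_shares = weighted_vote_shares(sample)
print(vote_shares)
```

In this toy sample the two preferences are tied by head count, but the weighted estimate comes out at roughly 55% to 45% because the Republican-leaning respondents carry more total turnout probability.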

One potential drawback of this method is that it applies a model developed in a previous election to a current election, based on the assumption that the relationships between turnout and the key predictors are the same across elections. In this study, our models are built using voter participation data from the 2014 elections, and the resulting weights are applied retroactively to produce survey estimates of the likely 2014 vote. As a result, we cannot test how well these models would perform in future elections. The likely voter model used by CBS News, which has employed a variation of this method for decades, suggests that such assumptions are reasonable. Rather, our goal is to explore the differences between probabilistic and deterministic approaches to modeling voter turnout, and learn how much these models are improved when we include information on prior voting behavior drawn from the voter file.

In our evaluations of probabilistic models, we also tested a "kitchen sink" model that includes the seven Perry-Gallup measures along with a range of demographic and political variables including age, education, income, race/ethnicity, party affiliation, ideological consistency, home ownership and length of tenure at current residence – all factors known to be correlated with voter turnout.

In testing probabilistic approaches, we explored two methods for creating predicted probabilities: logistic regression, a common modeling tool, and a machine-learning technique known as "random forest."

In addition to serving as weights, the predicted probabilities can also be used with a cutoff. As with the Perry-Gallup scale, the cutoff method would count the top-scoring respondents as likely voters and ignore the others. For example, assuming that 60% of registered voters are going to turn out, the models would include only the top 60% of respondents as ranked by their predicted probabilities of voting.
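The ranking step might look like the following sketch. The helper name is hypothetical, the probabilities are made up, and ties at the threshold are resolved by original order, which is an arbitrary choice for illustration.

```python
def likely_voters_by_cutoff(probabilities, turnout):
    """Flag the top-ranked share of respondents as likely voters.

    probabilities: predicted turnout probability for each respondent.
    turnout: expected turnout as a fraction (e.g. 0.60).
    Returns a list of booleans aligned with the input order.
    """
    n_keep = round(len(probabilities) * turnout)
    # Rank respondent positions from highest to lowest probability.
    ranked = sorted(range(len(probabilities)),
                    key=lambda i: probabilities[i], reverse=True)
    keep = set(ranked[:n_keep])
    return [i in keep for i in range(len(probabilities))]


# Hypothetical predicted probabilities for five respondents.
probs = [0.95, 0.10, 0.70, 0.40, 0.85]
flags = likely_voters_by_cutoff(probs, turnout=0.60)
print(flags)  # [True, False, True, False, True]
```

Unlike the probability-weighted estimate, respondents below the line contribute nothing here, no matter how close their probability is to the threshold.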

Logistic regression

To build a model comparable to the Perry-Gallup seven-item scale, the same seven questions on voter engagement, past voting behavior, voter intent and knowledge about where to vote were used. (The "kitchen sink" model used these items along with demographic and political variables.) The questions were entered into the model as predictors without combining or collapsing categories. Variables were rescaled to vary between 0 and 1, with "don't know" responses coded as zero.

A logistic regression was performed using verified vote from the voter file as the dependent variable. The regression produces a predicted probability of voting for each respondent and coefficients for each measure. The probabilities are then used in various ways as described below to produce a model of the electorate for forecasting. In subsequent elections, the coefficients derived from these models can be used with the answers from respondents in contemporary surveys to produce a probability of voting for each person. As with the Perry-Gallup approach, this method assumes that the measures used in the study are equally relevant for distinguishing voters from nonvoters in a variety of elections.
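Applying previously estimated coefficients to a new respondent amounts to evaluating the logistic function on a weighted sum of the answers. The sketch below shows only that step. The item names, the coefficients and the intercept are all invented for illustration and are not the values estimated in the report; only three items are shown to keep it short.

```python
import math


def turnout_probability(answers, coefficients, intercept):
    """Predicted probability of voting from a fitted logistic model.

    answers: item name -> response rescaled to the 0-1 range.
    coefficients: item name -> coefficient from an earlier election's model.
    """
    linear = intercept + sum(coefficients[name] * answers[name]
                             for name in coefficients)
    return 1.0 / (1.0 + math.exp(-linear))


# Hypothetical coefficients (not from the report).
coefs = {"thought_given": 1.0, "vote_intent": 2.0, "voted_2012": 1.0}
b0 = -2.0

engaged = {"thought_given": 1.0, "vote_intent": 1.0, "voted_2012": 1.0}
disengaged = {"thought_given": 0.0, "vote_intent": 0.0, "voted_2012": 0.0}

p_engaged = turnout_probability(engaged, coefs, b0)
p_disengaged = turnout_probability(disengaged, coefs, b0)
print(round(p_engaged, 3), round(p_disengaged, 3))
```

Under these made-up values the fully engaged respondent gets a probability of about 0.88 and the disengaged one about 0.12.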

Decision trees and random forests

Another probabilistic approach involves the use of "decision trees" to identify the best configuration of variables to predict a particular outcome – in this case, voting and nonvoting. The typical decision tree analysis identifies various ways of splitting a dataset into separate paths or branches, based on options for each variable. The decision tree approach can be improved using a machine-learning technique known as "random forests." Random forests employ large numbers of trees fit to random subsamples of the data in order to provide more precise predictions than would be obtained by fitting a single tree to all of the data. Unlike classical methods for estimating probabilities such as logistic regression, random forests perform well with large numbers of predictor variables and in the presence of complex interactions. We applied the random forest method to the computation of vote probabilities, starting with the same variables employed in the other methods described earlier.

When a single decision tree is fit to a dataset, the algorithm starts by searching for the value among the predictor variables that can be used to split the dataset into two groups that are most homogeneous with respect to the outcome variable, in this case whether or not someone voted in the 2014 elections. These subgroups are called nodes, and the decision tree algorithm proceeds to split each node into progressively more and more homogeneous groups until a stopping criterion is reached. One thing that makes the random forest technique unique is that prior to splitting each node, the algorithm selects a random subset of the predictor variables to use as candidates for splitting the data. This has the effect of reducing the correlation between individual trees, which further reduces the variance of the predictions.
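The search for a single split can be illustrated with a toy example. The text does not say which homogeneity measure was used, so the Gini impurity below is an assumption, as are the function names, variable names and data.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 outcomes (0 means perfectly homogeneous)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(rows, labels):
    """Find the (variable, threshold) giving the most homogeneous two groups.

    rows: list of dicts mapping variable name -> numeric value.
    labels: 0/1 outcome for each row (1 = voted).
    Returns (variable, threshold, weighted_impurity), or None if no split exists.
    """
    best = None
    n = len(rows)
    for var in rows[0]:
        # Skip the largest value so the right-hand group is never empty.
        for threshold in sorted({r[var] for r in rows})[:-1]:
            left = [y for r, y in zip(rows, labels) if r[var] <= threshold]
            right = [y for r, y in zip(rows, labels) if r[var] > threshold]
            impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or impurity < best[2]:
                best = (var, threshold, impurity)
    return best


# Hypothetical data: stated intent separates voters perfectly, interest does not.
rows = [
    {"intent": 1.0, "interest": 0.0},
    {"intent": 1.0, "interest": 1.0},
    {"intent": 0.0, "interest": 1.0},
    {"intent": 0.0, "interest": 0.0},
]
voted = [1, 1, 0, 0]
split = best_split(rows, voted)
print(split)  # ('intent', 0.0, 0.0)
```

A full tree would apply the same search recursively within each resulting node. A random forest would additionally restrict the outer loop to a random subset of the variables at every node.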

When employing statistical models for prediction, it is important to address the possibility that the models are overfitting the data – finding patterns in data that reflect random noise rather than meaningful signal – which reduces their accuracy when applied to other datasets. This is less of a concern for logistic regression, which is unlikely to overfit when the sample size is large relative to the number of independent variables (as is the case here). But it is a concern for powerful machine-learning methods such as random forests that actively seek out patterns in data. One advantage of random forests in this regard is the fact that each tree is built using a different random subsample of the data. In our analysis, the predicted probabilities for a case are based only on those trees that were built using subsamples where that case was excluded. The result is that any overfitting that occurs in the tree-building process does not carry over into the scores that are applied to each case.
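The idea of scoring each case only with models that never saw it can be sketched as follows. This is not a random forest: the base learner is a deliberately trivial stand-in (the voting rate within each index score), and drawing subsamples with replacement is an assumption. Only the bookkeeping of excluded cases is the point, and all names and data are hypothetical.

```python
import random


def out_of_sample_probabilities(scores, voted, n_learners=200, seed=0):
    """Average predictions for each case over learners that excluded it.

    scores: a categorical predictor (e.g. an index score) per respondent.
    voted: 0/1 verified vote per respondent.
    """
    rng = random.Random(seed)
    n = len(scores)
    sums = [0.0] * n
    counts = [0] * n
    for _ in range(n_learners):
        # Random subsample drawn with replacement (an assumption).
        drawn = [rng.randrange(n) for _ in range(n)]
        included = set(drawn)
        # Trivial base learner: voting rate within each score category.
        totals, votes = {}, {}
        for i in drawn:
            totals[scores[i]] = totals.get(scores[i], 0) + 1
            votes[scores[i]] = votes.get(scores[i], 0) + voted[i]
        overall = sum(voted[i] for i in drawn) / n
        for i in range(n):
            if i in included:
                continue  # this learner saw case i, so it may not score it
            if scores[i] in totals:
                rate = votes[scores[i]] / totals[scores[i]]
            else:
                rate = overall  # category absent from this subsample
            sums[i] += rate
            counts[i] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]


# Hypothetical respondents: index score and verified vote.
index_scores = [7, 7, 7, 6, 6, 5, 5, 4]
verified = [1, 1, 0, 1, 0, 1, 0, 0]
oos = out_of_sample_probabilities(index_scores, verified)
print([None if p is None else round(p, 2) for p in oos])
```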

One final regression-based method tested here is to use a voter turnout probability created by the voter file vendor as a predictor or a weight. The TargetSmart voter file includes a 2014 turnout likelihood score developed by Clarity Campaign Labs. This score ranges from 0 to 1 and can be interpreted as a probability of voting in the 2014 general election.

The statistical analysis reported in the next section uses the verified vote as the measure of turnout. Among registered voters in the sample, 63% have a voter file record indicating that they voted in 2014. Self-reported voting was more common; 75% of registered voters said they turned out. Appendix B discusses the pros and cons of using verified vote vs. self-reported vote.

Source: https://www.pewresearch.org/methods/2016/01/07/measuring-the-likelihood-to-vote/

Posted by: navarrosurriess1937.blogspot.com
